Immutable Infrastructure: No SSH

2015-07-13 by Axel Fontaine

One of the things that is really exciting about Immutable Infrastructure is that it opens up a vast number of opportunities to revisit old ways and change them for the better. One of the old problems it lets us tackle is drift, the slow natural divergence of machines from each other and from their intended setup. There are two main causes for this: deferred provisioning and updates. Both are exacerbated by time. The further apart in time machines are set up and the longer they exist, the higher the likelihood of running into drift problems. Let's look at each of these in turn.

Why is drift a problem?

The first question to ask is really: why is drift itself a problem? Or to put it differently: why is having identical machines important?

One of the primary ways to reduce risk in a software system is testing. Both manual and automated testing rely on the same three-step workflow:

- Put the system in a known state

- Perform an action

- Compare the results against your expectations

Putting the system in a known state does not only apply to data, it also applies to the versions of all the software components installed. Once your system is correctly set up, your tests will then validate version X of your code running on version Y of your platform while having version Z of a library on board. All other combinations are unknown and must be validated separately. In other words, there is no guarantee that the exact same version of your code will work identically when combined with older or newer versions of the platform and libraries: older versions may still contain bugs, and newer versions could introduce regressions or subtle changes.

For the investment you have made with your tests to have any value, you must therefore ensure the known state of the system and the versions of all components can be easily and safely replicated across machines and environments.

Or to put it more simply: to have any guarantee that things will work, you need to run in production what you tested in test.
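To make the "version X on platform Y with library Z" point concrete, here is a minimal sketch in Python. The `Stack` type, the `validated` set and all version strings are made up for illustration; the only idea taken from the text is that a combination counts as tested only if that exact combination was exercised.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stack:
    """One concrete combination of component versions (all values are made up)."""
    code: str
    platform: str
    library: str


# Combinations that the test suite actually ran against.
validated = {Stack(code="X", platform="Y", library="Z")}


def is_validated(stack: Stack) -> bool:
    """True only if this exact combination was exercised by the tests."""
    return stack in validated


production = Stack(code="X", platform="Y", library="Z")
drifted = Stack(code="X", platform="Y", library="Z+1")  # same code, newer library

print(is_validated(production))  # True
print(is_validated(drifted))     # False
```

The code version is identical in both cases, yet the second combination is unknown territory as far as the tests are concerned.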

The same thing of course also applies within a single environment. If you have multiple machines serving clients behind a load balancer and want to keep your sanity, you also want these to be absolutely identical.

The problems with deferred provisioning

Now you may think this is easily solved by using some solid automated provisioning mechanism. Regardless of whether you favor shell scripts or configuration management tools, this is not as easy as it seems. The problem lies in the common usage patterns of package managers and update timings in central repositories. Trouble starts the minute you start relying on commands like this:

> sudo apt-get install mypkg

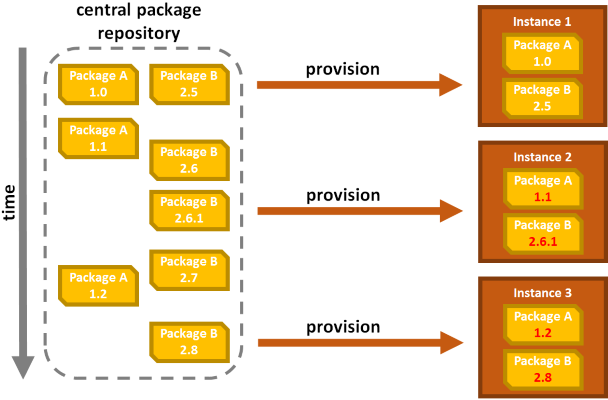

To better illustrate why this is a problem, look at this simple example of three machines being set up based on the exact same provisioning script at different points in time:

As you can see, even though all machines install Package A and Package B, the difference in provisioning time causes the same script to pull different versions of the same packages onto the various machines. You effectively couple the stability of your system to the update schedule of individual packages in the central repository!
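The effect can be reproduced with a small simulation. This is only a sketch: the release history in `REPO`, the package names and the provisioning days are invented, and `latest_at` merely mimics what an unpinned install would pick on a given day.

```python
# Hypothetical release history of a central repository:
# package -> list of (release_day, version), sorted by release_day.
REPO = {
    "package-a": [(0, "1.0"), (12, "1.1")],
    "package-b": [(0, "2.0"), (5, "2.1"), (25, "3.0")],
}


def latest_at(package: str, day: int) -> str:
    """Version an unpinned install would pick on the given day."""
    released = [version for release_day, version in REPO[package] if release_day <= day]
    return released[-1]


def provision(day: int) -> dict:
    """Run the same 'install package-a package-b' script on the given day."""
    return {package: latest_at(package, day) for package in REPO}


# Same script, three different provisioning days.
machines = {name: provision(day) for name, day in [("m1", 1), ("m2", 10), ("m3", 30)]}
for name, packages in machines.items():
    print(name, packages)
```

With this made-up history, all three machines end up with a different set of versions even though the script never changed.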

Images to the rescue

One way to fix this is to pin all packages and all their transitive dependencies to specific versions. While this lets you regain control of the update schedule of your system, it comes with a high maintenance overhead.
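As a rough sketch of what pinning involves, the snippet below builds an `apt-get` command that requests exact versions using the `name=version` form. The dependency names (`libfoo`, `libbar`) and all version strings are hypothetical; the point is that every transitive dependency needs its own entry, and every entry has to be maintained by hand.

```python
# Hypothetical pins: every package, including transitive dependencies,
# needs an explicit version.
PINS = {
    "mypkg": "1.4.2-1",
    "libfoo": "0.9.1-3",  # dependency of mypkg (made-up name)
    "libbar": "2.0.0-1",  # dependency of libfoo (made-up name)
}


def pinned_install_command(pins: dict) -> str:
    """Build an apt-get command that requests exact versions via name=version."""
    targets = " ".join(f"{name}={version}" for name, version in sorted(pins.items()))
    return f"sudo apt-get install -y {targets}"


print(pinned_install_command(PINS))
```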

A simpler and more reliable alternative is to stick to the proven mantra: build your artifact only once.

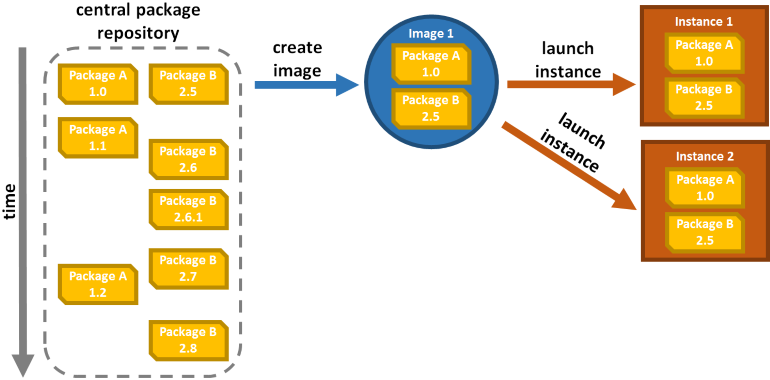

To eliminate differences (and thus potential causes of failure) between environments, your build should produce an artifact that captures your application and all its dependencies at the lowest level possible. When working with virtual infrastructure, the lowest level possible is simply an image of the entire machine. From then on, identical instances can be created whenever you want, as often as you want:

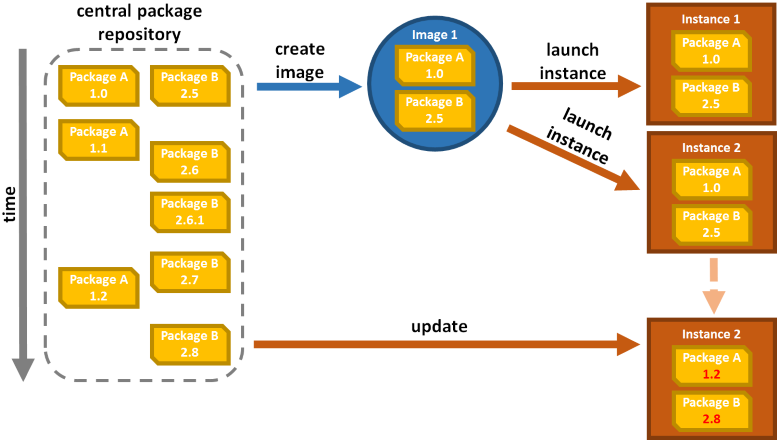
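One way to picture "build once" is to fingerprint the artifact at build time and refuse to deploy anything else. The following is a minimal sketch under that assumption: the image is represented by a plain byte string, and the `digest` and `deploy` helpers are illustrative names, not part of any particular tool.

```python
import hashlib


def digest(image: bytes) -> str:
    """Content fingerprint of an image; identical bytes give identical digests."""
    return hashlib.sha256(image).hexdigest()


def deploy(image: bytes, expected_digest: str, environment: str) -> str:
    """Refuse to deploy anything other than the artifact the build produced."""
    if digest(image) != expected_digest:
        raise ValueError(f"{environment}: image differs from the built artifact")
    return f"{environment}: deployed {expected_digest[:12]}"


# The build runs once and records the digest of its output.
built_image = b"application + libraries + platform"  # stand-in for a real image file
built_digest = digest(built_image)

# Every environment receives the very same bytes.
for environment in ("test", "production"):
    print(deploy(built_image, built_digest, environment))
```

Any image that was rebuilt or patched along the way would produce a different digest and be rejected.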

While images make it easy to create identical machines, this doesn't solve all problems. As soon as it is possible to log in, you lose the guarantee that those machines will not diverge over time.

The longer those machines exist, the more likely it is that someone will have modified something, and the harder they become to recreate exactly. This removes many of the benefits.

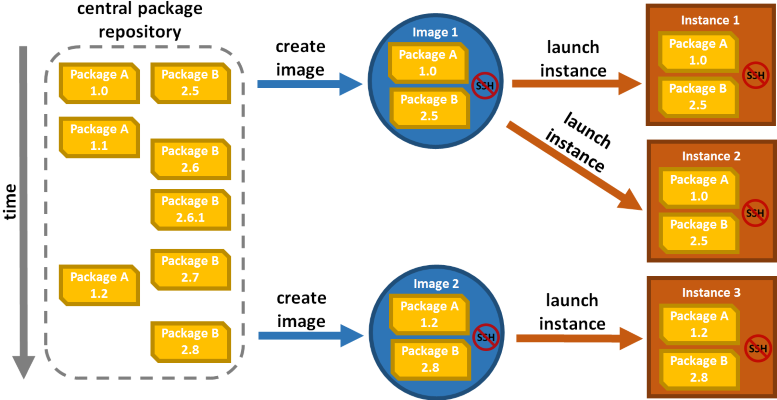
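This kind of divergence can be made visible by comparing what an image shipped with against what a long-lived machine reports. The sketch below assumes both sides can be expressed as simple package-to-version mappings; the manifests and the `find_drift` helper are hypothetical.

```python
def find_drift(image_manifest: dict, machine_manifest: dict) -> dict:
    """Return {package: (expected, actual)} for every difference.

    A missing package is reported with None on the side where it is absent.
    """
    drift = {}
    for package in sorted(set(image_manifest) | set(machine_manifest)):
        expected = image_manifest.get(package)
        actual = machine_manifest.get(package)
        if expected != actual:
            drift[package] = (expected, actual)
    return drift


# Hypothetical manifests: what the image shipped vs. what a long-lived machine reports.
image = {"package-a": "1.0", "package-b": "2.0"}
machine = {"package-a": "1.0", "package-b": "2.1", "debug-tool": "0.3"}

print(find_drift(image, machine))
```

Here one package was upgraded by hand and one tool was installed ad hoc, which is exactly the sort of change that makes a machine hard to recreate.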

Enforcing immutability

So what can be done to remedy this? You need to prevent login. This not only removes the drift problem. It also has very compelling security implications by reducing your attack surface. Vulnerabilities like ShellShock simply vanish.* And on top of that it forces you to stick to a clean image-based workflow, without hacks or back doors. In light of all these advantages we'd like to invite you to join us in the no SSH movement.

Your infrastructure now effectively becomes immutable. Any change requires building a new image through your regular automated build and deployment pipeline. In turn your system drastically gains in simplicity and reliability. A pretty good deal if you ask us.
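The same idea can be sketched in code with an immutable value type: an existing image cannot be edited, and any change yields a new image with a new build number. The `Image` fields and the `rebuild` helper are illustrative assumptions, not a real image format or pipeline API.

```python
from dataclasses import FrozenInstanceError, dataclass, replace


@dataclass(frozen=True)
class Image:
    """A built image; the fields are illustrative, not a real image format."""
    build: int
    app_version: str


def rebuild(previous: Image, app_version: str) -> Image:
    """A change never edits an image; the pipeline emits a new one."""
    return replace(previous, build=previous.build + 1, app_version=app_version)


v1 = Image(build=1, app_version="1.0")
try:
    v1.app_version = "1.0-hotfix"  # in-place modification is rejected
except FrozenInstanceError:
    print("images cannot be modified in place")

v2 = rebuild(v1, app_version="1.1")
print(v1, v2)
```

The original image stays exactly as it was built, so it can still be redeployed unchanged if the new one misbehaves.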

Conclusion

Drift reduces the reliability of your system by effectively undermining the guarantees you gained while testing it. Yet it very easily occurs when relying on traditional provisioning.

By moving to a build and deployment pipeline that captures your application and all of its dependencies at the lowest possible level, you can regain a lot of confidence. When dealing with virtual hardware, the best way to achieve this is to produce images of an entire system. To ensure the process stays reliable and to keep you honest, you should eliminate SSH and the ability to log in. Your images and your infrastructure effectively become immutable. Any change triggers the creation of a new image, which is built only once for all environments.

* Update: As correctly pointed out by a few people, the ShellShock vulnerability doesn't fully vanish with this in a general-purpose operating system. This is a CloudCaptain-specific point as CloudCaptain doesn't include bash either.

Take it to 11

CloudCaptain lets you do all this and more. CloudCaptain intelligently analyses your application and generates minimal images in seconds. There is no general-purpose operating system and no tedious provisioning. CloudCaptain images are lean, secure and efficient. You can run them on VirtualBox for development and deploy them unchanged and with zero downtime on AWS for test and production.

Have fun! And if you haven't already, sign up for your CloudCaptain account now. All you need is a GitHub user and you'll be up and running in no time. The CloudCaptain free plan aligns perfectly with the AWS free tier, so you can deploy your application to EC2 completely free.