Worker Apps

2016-08-04 by Axel Fontaine

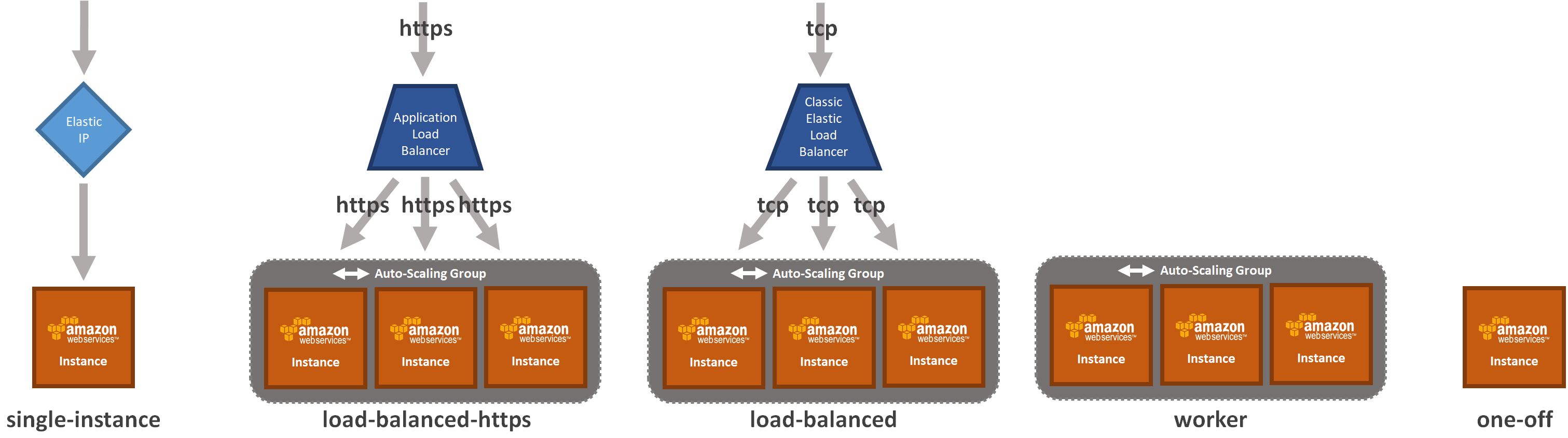

Until now CloudCaptain came with support for two types of applications: single-instance and load-balanced.

Single-instance applications sit behind an Elastic IP address and are updated with zero-downtime blue/green deployments. Even though they lack runtime redundancy, they are great for non-critical workloads, typically involving HTTP or HTTPS requests.

Load-balanced applications, on the other hand, sit behind an Elastic Load Balancer. Not only are they updated with zero-downtime blue/green deployments, they also come with runtime redundancy, zero-downtime auto-recovery and auto-scaling. They are a great choice for robust and demanding HTTP and HTTPS workloads.

While single-instance and load-balanced apps serve quite a lot of needs, there are times when you simply don't need a stable entry point into your application. For those scenarios, having an extra Elastic IP or ELB simply adds unnecessary complexity and cost.

To address this, today we are introducing a third kind of application: worker apps.

Introducing Worker Apps

Worker apps come with the same reliability advantages as load-balanced apps. They are updated with zero-downtime blue/green deployments and they come with runtime redundancy, zero-downtime auto-recovery and auto-scaling. However, they don't have an Elastic Load Balancer in front of them.

Let's look at 3 scenarios where they are particularly useful.

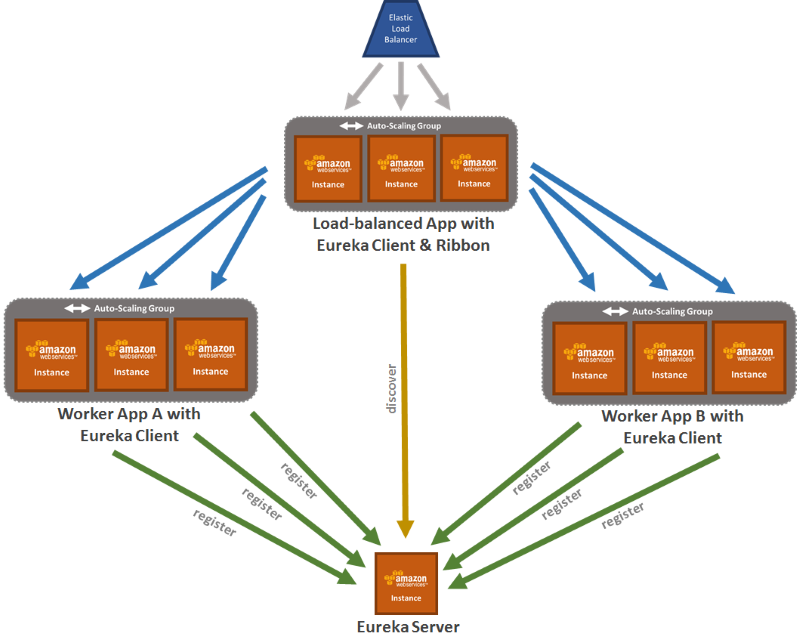

Client-side load balancing

When Netflix decided to go all-in on AWS, it was still early days for the cloud. At that time ELBs could only be internet-facing and, unlike today, simply weren't a great choice for internal communication between services.

Those needs sparked the creation of a number of open-source tools to address them, including Eureka and Ribbon. You can think of Eureka as a central place where service instances can register themselves, so they can be discovered by clients. Ribbon is a library that you run on the client that will then take care of load balancing calls between multiple instances of the same service that were discovered via Eureka.
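To make the register-then-balance idea concrete, here is a minimal sketch in plain Python. It does not use the Eureka or Ribbon APIs: `Registry` and `RoundRobinClient` are hypothetical stand-ins, the service name and addresses are made up, and round-robin is just one simple balancing strategy chosen for illustration.

```python
import itertools
from collections import defaultdict


class Registry:
    """Hypothetical in-memory stand-in for a service registry (the role Eureka plays)."""

    def __init__(self):
        self._instances = defaultdict(list)

    def register(self, service, address):
        # Each instance announces itself under its service name.
        if address not in self._instances[service]:
            self._instances[service].append(address)

    def lookup(self, service):
        # Return a copy so callers cannot mutate the registry's own list.
        return list(self._instances.get(service, []))


class RoundRobinClient:
    """Hypothetical client-side balancer (the role Ribbon plays)."""

    def __init__(self, registry, service):
        self._registry = registry
        self._service = service
        self._counter = itertools.count()

    def next_instance(self):
        # Look up the current instances on every call, then rotate through them.
        instances = self._registry.lookup(self._service)
        if not instances:
            raise LookupError(f"no instances registered for {self._service}")
        return instances[next(self._counter) % len(instances)]


registry = Registry()
registry.register("orders", "10.0.0.11:8080")  # made-up addresses
registry.register("orders", "10.0.0.12:8080")

client = RoundRobinClient(registry, "orders")
picks = [client.next_instance() for _ in range(4)]
```

Because the client picks the target itself, nothing has to sit in front of the service instances, which is exactly the situation worker apps are meant for.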

To make it easier to run setups like the one above locally, we have also made changes to the way we handle networking with VirtualBox. With VirtualBox 5.1 and newer all instances in the dev environment will now receive their own unique IP address with a dedicated NAT network and will be able to talk directly to each other.

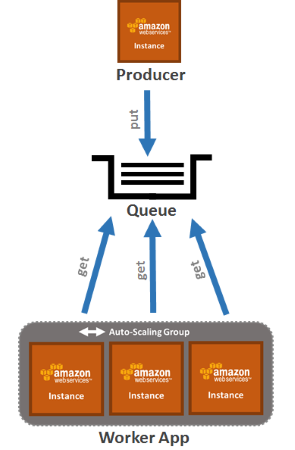

Queues

Another common scenario for worker apps is queueing. In this case services communicate asynchronously with each other via queues. These could be SQS queues or any other async solution. Worker apps can be great message consumers and their auto-scaling capabilities can be a great fit for dealing with varying loads.
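As a rough illustration of the consumer pattern, the sketch below uses Python's standard `queue.Queue` as a local stand-in for SQS or any other queue. The `worker` function, the `None` shutdown sentinel and the upper-casing "work" are all assumptions made for the example. Each thread plays the part of one worker instance pulling from the shared queue.

```python
import queue
import threading


def worker(jobs, results, lock):
    """Consume messages until the shutdown sentinel (None) arrives."""
    while True:
        message = jobs.get()
        if message is None:
            break
        processed = message.upper()  # placeholder for real work
        with lock:
            results.append(processed)


jobs = queue.Queue()
results = []
lock = threading.Lock()

# Three consumers share one queue; adding consumers is the "scale out" step.
workers = [threading.Thread(target=worker, args=(jobs, results, lock)) for _ in range(3)]
for w in workers:
    w.start()

for message in ["a", "b", "c", "d"]:
    jobs.put(message)

# One sentinel per consumer so that every thread shuts down.
for _ in workers:
    jobs.put(None)
for w in workers:
    w.join()
```

Since consumers only pull work and never receive inbound connections, no stable entry point is needed, and the number of consumers can follow the load.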

Cron jobs

Finally, we also have periodic tasks, or cron jobs, where the trigger is time-based. Even if it is just a single instance running, the auto-scaling group of a worker app comes in handy to automatically start a new one should the old one die.
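One way to make such a time-triggered job survive an instance replacement is to derive the schedule from the wall clock rather than from in-memory state. The sketch below is only an illustration of that idea under stated assumptions: `next_run` is a hypothetical helper, and aligning runs to fixed intervals counted from the Unix epoch is a design choice made for the example, not something CloudCaptain prescribes.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def next_run(now, interval):
    """Return the first interval boundary strictly after `now`.

    `now` must be timezone-aware. Boundaries are counted from the Unix epoch,
    so any freshly started instance computes the same schedule.
    """
    periods_elapsed = (now - EPOCH) // interval  # timedelta // timedelta yields an int
    return EPOCH + (periods_elapsed + 1) * interval


now = datetime(2016, 8, 4, 10, 7, 30, tzinfo=timezone.utc)
upcoming = next_run(now, timedelta(minutes=15))
# upcoming is 2016-08-04 10:15:00 UTC
```

A replacement instance launched by the auto-scaling group can call `next_run` on startup and carry on with the same schedule without knowing anything about its predecessor.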

Summary

With worker apps, you now have a third kind of application available. Unlike single-instance and load-balanced apps, they do not have an Elastic IP or ELB as an entry point, as this is simply not needed for a number of scenarios including client-side load balancing, queue consumers and cron job-driven apps. In those cases worker apps reduce both cost and complexity.

So go ahead and launch a new worker app today! And if you haven't signed up already, simply log in with your GitHub ID (it's free!) to start deploying your JVM and Node.js applications effortlessly on AWS in minutes!