Logback and Log4J2 appender for AWS CloudWatch Logs

2016-12-09 by Axel Fontaine

Logging is one of the major diagnostic tools we have at our disposal for identifying issues with our applications. Traditionally, logging consisted of writing lines of text to a file on the local filesystem. This creates a number of issues. First, you have to hunt through various backend servers to find the one that actually processed the request you are looking for, not forgetting, of course, that multiple requests from the same session may land on different backend instances. Much more severe, however, is that in a cloud world any persistent data on an instance dies together with the instance. And with auto-scaling, we no longer even actively control when certain instances are terminated.

To remedy this, we need a new solution: centralized logging. All logs get sent to a central service where they are aggregated, stored, and made searchable. AWS offers its own service for this called CloudWatch Logs.

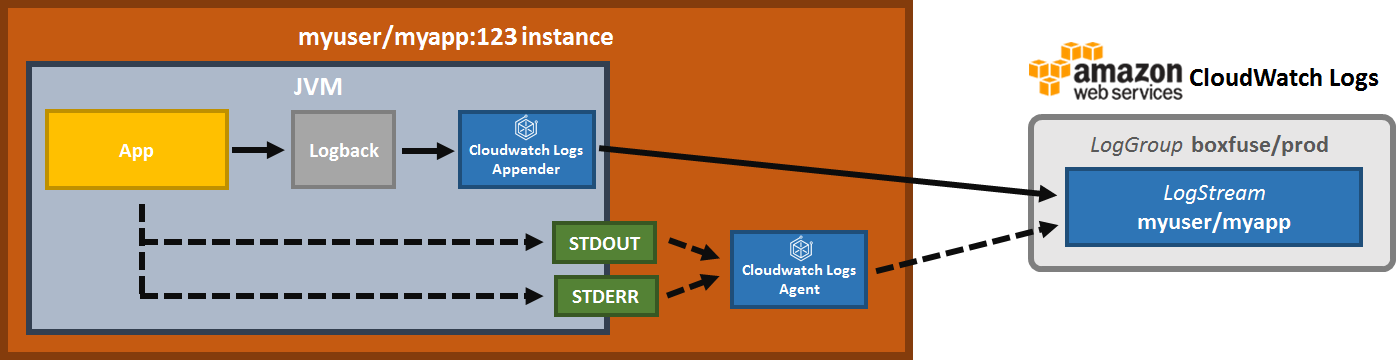

We first added support for it in October, by letting you create applications and indicate that you want their logs sent to CloudWatch Logs. Images of those applications automatically redirect both stdout and stderr, using the open-source CloudCaptain CloudWatch Logs agent, to the CloudWatch Logs LogStream of the application within the CloudWatch Logs LogGroup for the current environment. If you haven't already, pause here and read that October article first.

Today we are taking things further for JVM apps by offering you a brand-new open-source appender for both Logback and Log4J2. It integrates natively into your preferred logging framework, giving you much more fine-grained control over exactly how your logs are routed.

Installation

To include the CloudCaptain Java log appender for AWS CloudWatch Logs in your application, all you need to do is add the dependency to your build file.

Maven

Start by adding the CloudCaptain Maven repository to your list of repositories in your pom.xml:

<repositories>
    <repository>
        <id>central</id>
        <url>https://repo1.maven.org/maven2/</url>
    </repository>
    <repository>
        <id>boxfuse-repo</id>
        <url>https://files.cloudcaptain.sh</url>
    </repository>
</repositories>

Then add the dependency:

<dependency>
    <groupId>com.boxfuse.cloudwatchlogs</groupId>
    <artifactId>cloudwatchlogs-java-appender</artifactId>
    <version>1.0.2.17</version>
</dependency>

Gradle

Start by adding the CloudCaptain Maven repository to your list of repositories in your build.gradle:

repositories {
    mavenCentral()
    maven {
        url "https://files.cloudcaptain.sh"
    }
}

Then add the dependency:

dependencies {
    implementation 'com.boxfuse.cloudwatchlogs:cloudwatchlogs-java-appender:1.0.2.17'
}

Usage

To use the appender you must add it to the configuration of your logging system.

Logback

Add the appender to your logback.xml file at the root of your classpath. In a Maven or Gradle project, this location is src/main/resources:

<configuration>
    <appender name="CloudCaptain-CloudwatchLogs" class="com.boxfuse.cloudwatchlogs.logback.CloudwatchLogsLogbackAppender"/>
    <root level="debug">
        <appender-ref ref="CloudCaptain-CloudwatchLogs" />
    </root>
</configuration>

Log4J2

Add the appender to your log4j2.xml file at the root of your classpath. In a Maven or Gradle project, this location is src/main/resources:

<?xml version="1.0" encoding="UTF-8"?>
<Configuration packages="com.boxfuse.cloudwatchlogs.log4j2">
    <Appenders>
        <CloudCaptain-CloudwatchLogs/>
    </Appenders>
    <Loggers>
        <Root level="debug">
            <AppenderRef ref="CloudCaptain-CloudwatchLogs"/>
        </Root>
    </Loggers>
</Configuration>

Code

And that's all the setup you need! You can now log from your code as you normally would, using either SLF4J or the logging API of your choice.

Logging a message like this:

import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

private static final Logger LOGGER = LoggerFactory.getLogger(MyClazz.class);
...
LOGGER.info("My log message ...");

will now automatically send it to CloudWatch Logs in structured form, as a JSON document. The CloudCaptain CloudWatch Logs appender also automatically adds critical metadata to the document, so that it looks like this:

{
    "image": "myuser/myapp:123",
    "instance": "i-607b5ddc",
    "level": "INFO",
    "logger": "org.mycompany.myapp.MyClazz",
    "message": "My log message ...",
    "thread": "main"
}

This is very useful, as it allows us to query and filter the logs later.
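To make the structure above more concrete, here is a minimal Java sketch of how a log call plus metadata could be flattened into such a JSON document. It is a hypothetical illustration, not the appender's actual code: the class `StructuredEventSketch` and its methods are made up for this example, the `image` and `instance` values are simply passed in (how the real appender obtains them is not covered here), and keys are sorted alphabetically only so that the output matches the order shown above.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical sketch, NOT the CloudCaptain appender's code: it only shows how
// a log event plus metadata could be turned into a flat JSON document.
final class StructuredEventSketch {

    private StructuredEventSketch() {}

    // Serializes the attributes as a JSON object. The TreeMap sorts keys
    // alphabetically so the output follows the order of the example above.
    static String toJson(Map<String, String> attributes) {
        StringBuilder sb = new StringBuilder("{");
        boolean first = true;
        for (Map.Entry<String, String> e : new TreeMap<>(attributes).entrySet()) {
            if (!first) {
                sb.append(',');
            }
            first = false;
            sb.append('"').append(escape(e.getKey())).append("\":\"")
              .append(escape(e.getValue())).append('"');
        }
        return sb.append('}').toString();
    }

    // Minimal JSON string escaping: quotes, backslashes and control characters.
    static String escape(String s) {
        StringBuilder sb = new StringBuilder();
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"': sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) {
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
            }
        }
        return sb.toString();
    }

    // Combines the per-call attributes with the (hypothetical, passed-in)
    // image and instance metadata.
    static String event(String image, String instance, String level,
                        String logger, String message, String thread) {
        Map<String, String> attrs = new LinkedHashMap<>();
        attrs.put("image", image);
        attrs.put("instance", instance);
        attrs.put("level", level);
        attrs.put("logger", logger);
        attrs.put("message", message);
        attrs.put("thread", thread);
        return toJson(attrs);
    }

    public static void main(String[] args) {
        System.out.println(event("myuser/myapp:123", "i-607b5ddc", "INFO",
                "org.mycompany.myapp.MyClazz", "My log message ...", "main"));
    }
}
```

Running `main` prints the example document on a single line. Because every event shares the same flat attribute names, filters like the ones shown later can match on a key such as `instance` without parsing free-form text.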

Note that these are just the attributes sent automatically. You can also fill in many others (such as the current user, session ID, request ID, ...) via the MDC (Mapped Diagnostic Context), as described in the documentation of the GitHub project.
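The MDC is a per-thread map of key/value pairs that the logging framework makes available to every event logged on that thread. The following sketch imitates that idea with a plain `ThreadLocal` so that it runs on the JDK alone. `MiniMdc`, its methods and the `requestId` key are hypothetical; in real code you would call SLF4J's `org.slf4j.MDC.put(key, value)` and `MDC.remove(key)`, and which MDC entries the CloudCaptain appender sends is described in the project's documentation.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical illustration of the MDC idea using a plain ThreadLocal.
// Real code would use org.slf4j.MDC.put(key, value) / MDC.remove(key) instead.
final class MiniMdc {
    // Each thread gets its own independent context map.
    private static final ThreadLocal<Map<String, String>> CONTEXT =
            ThreadLocal.withInitial(HashMap::new);

    private MiniMdc() {}

    static void put(String key, String value) { CONTEXT.get().put(key, value); }

    static void remove(String key) { CONTEXT.get().remove(key); }

    static Map<String, String> copy() { return new HashMap<>(CONTEXT.get()); }

    // Merges the current thread's context into the attributes of one log event.
    // Letting the built-in attributes win on key clashes is an assumption of this sketch.
    static Map<String, String> enrich(Map<String, String> baseAttributes) {
        Map<String, String> merged = new TreeMap<>(copy());
        merged.putAll(baseAttributes);
        return merged;
    }

    public static void main(String[] args) {
        MiniMdc.put("requestId", "req-42"); // hypothetical key and value
        try {
            Map<String, String> base = new TreeMap<>();
            base.put("level", "INFO");
            base.put("message", "Handling request");
            System.out.println(enrich(base));
        } finally {
            // Clear the entry so it does not leak into the next task on this thread.
            MiniMdc.remove("requestId");
        }
    }
}
```

Clearing the entry in a `finally` block matters on thread pools, where a worker thread would otherwise carry the previous request's context into the next one.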

Displaying the Logs

To display the logs, simply open a new terminal and show the logs for your app in your desired environment:

> boxfuse logs myapp -env=prod

Live tailing

And if you want to follow along in real time you can use log tailing:

> boxfuse logs myapp -env=prod -logs.tail

New logs will then be displayed automatically as soon as they are sent from the application to CloudWatch Logs.

Log filtering

This can, however, produce a lot of output. To help you find the needle in the haystack, CloudCaptain also supports powerful log filtering, both on existing logs and during live tailing of a log stream.

You can make use of the attributes of the structured logs to filter them exactly the way you like. For example, to tail the logs live on the prod environment on AWS and only show the logs for a specific instance, all you need to do is:

> boxfuse logs myapp -env=prod -logs.tail -logs.filter.instance=i-607b5ddc
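The attribute filter above can be read as: keep only the events whose `instance` attribute equals `i-607b5ddc`. The Java sketch below spells out that reading over in-memory events. It is a hypothetical illustration, not the boxfuse CLI's implementation; the class name, the second instance ID `i-0a1b2c3d`, and the assumption that several filters combine as a logical AND are ours.

```java
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

// Hypothetical sketch of attribute filtering over structured log events.
// It mirrors the meaning of -logs.filter.instance=i-607b5ddc; it is not how the
// boxfuse CLI or CloudWatch Logs actually evaluate filters.
final class AttributeFilterSketch {
    private AttributeFilterSketch() {}

    // Keeps only the events whose attributes match EVERY key/value pair in
    // `filters` (treating multiple filters as a logical AND is an assumption).
    static List<Map<String, String>> filter(List<Map<String, String>> events,
                                            Map<String, String> filters) {
        return events.stream()
                .filter(e -> filters.entrySet().stream()
                        .allMatch(f -> f.getValue().equals(e.get(f.getKey()))))
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<Map<String, String>> events = List.of(
                Map.of("instance", "i-607b5ddc", "level", "INFO", "message", "started"),
                Map.of("instance", "i-0a1b2c3d", "level", "WARN", "message", "slow request"));
        // Only the first event comes from instance i-607b5ddc.
        System.out.println(filter(events, Map.of("instance", "i-607b5ddc")));
    }
}
```

An event that lacks a filtered attribute entirely is dropped, since `e.get(...)` returns `null` and never equals the requested value.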

And if you aren't quite sure what you are looking for, you can also simply filter by time. For example, to show all the logs created in the last minute (60 seconds), you could do:

> boxfuse logs myapp -env=prod -logs.tail -logs.filter.start=-60
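The start filter `-60` is relative: it means 60 seconds before now. A minimal sketch of that conversion with `java.time` follows. `RelativeStartSketch` is a hypothetical name, treating the cut-off as inclusive is an assumption, and only negative (relative) values are modeled; any other forms the CLI accepts are described in the CloudCaptain documentation and are deliberately not guessed at here.

```java
import java.time.Instant;

// Hypothetical sketch: turns a relative start filter such as -60
// ("60 seconds before now") into an absolute cut-off and checks events against it.
final class RelativeStartSketch {
    private RelativeStartSketch() {}

    // Only negative, relative values are modeled; anything else is rejected.
    static Instant cutoff(long relativeSeconds, Instant now) {
        if (relativeSeconds >= 0) {
            throw new IllegalArgumentException(
                    "only negative (relative) start values are modeled: " + relativeSeconds);
        }
        // plusSeconds with a negative argument moves the instant back in time.
        return now.plusSeconds(relativeSeconds);
    }

    // An event is shown if it happened at or after the cut-off (inclusive by assumption).
    static boolean isIncluded(Instant eventTime, long relativeSeconds, Instant now) {
        return !eventTime.isBefore(cutoff(relativeSeconds, now));
    }

    public static void main(String[] args) {
        Instant now = Instant.now();
        System.out.println("Showing events at or after " + cutoff(-60, now));
    }
}
```

Passing `now` in explicitly, rather than calling `Instant.now()` inside `cutoff`, keeps the conversion deterministic and easy to test with a fixed clock.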

This is a very powerful feature, and there are many more filters, all of which can be combined. You can find all the details in the documentation.

Available today

The CloudCaptain Logback and Log4J2 Appender for CloudWatch Logs is available today. It is fully open-source (Apache 2.0 license) and you can browse the sources as well as read the detailed documentation on the GitHub project.

This feature is available at no charge to all customers.

So if you haven't already, sign up for your CloudCaptain account now (simply log in with your GitHub ID; it's free), start deploying your application effortlessly to AWS today, and enjoy the power of centralized logging, live tailing and filtering.